Navigating AI: A Parent’s Guide to Children and Artificial Intelligence


- The articles on the website «Kids and media» are written in simple Norwegian, for those who are new to the language.


Artificial intelligence is often shortened to AI in English and KI in Norwegian.

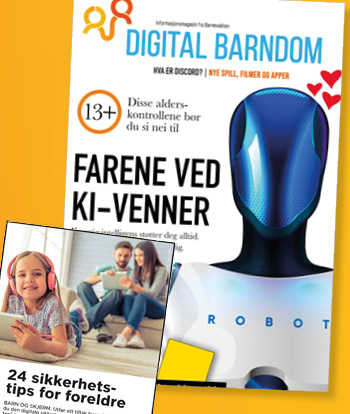

Advice for parents about artificial intelligence

Don’t reveal secrets: When your child interacts with artificial intelligence, it collects personal information. This information can be used to display advertisements or to improve the artificial intelligence. Employees may also read the conversations your child has with the AI to learn how it can be programmed better. Without reading all the fine print in the terms of each AI service, it is difficult to know to what extent the conversations are stored or used.

Can give dangerous advice: Artificial intelligence can provide dangerous advice in areas such as health and finance. Children may not have enough life experience or general knowledge to recognise that a piece of advice is dangerous or bad. Artificial intelligence can also make up claims and present them as facts.

The age limit is often 18 years: The technology that allows conversations with artificial intelligence is still quite new. Developers have implemented several ethical guidelines for the AI to follow, but the AI is so complex that one cannot be sure how it will respond to users. For this reason, the terms and conditions usually set the age limit at 18 years.

Artificial intelligence can be safer than traditional searches: Developers have implemented ethical guidelines that AIs must follow. With traditional searches, children can end up on terrible websites that contain factual errors, bad attitudes, poor advice, and so on. Artificial intelligence has become quite good at presenting both sides of an issue or advising caution. In this way, one can argue that AIs are safer than regular surfing or searching on the internet.

Instances of discriminatory and racist behaviour: On the other hand, developers can also equip artificial intelligence with poor ethical guidelines. For example, in February 2024, Google’s artificial intelligence Gemini made it very difficult for users to get images of people with light skin. If you asked the AI to create an image of a «black» person, it did so willingly. If you asked it to create an image of a «white» person, it refused and gave a mild moral lecture against such requests. The AI was thus racist. There were so many complaints that Google disabled the ability to create images of people while developers sat down to write new code, that is, new ethical guidelines.